April 25, 2024

We all know that AI continues to be a driving force across the globe, in the lives of consumers as well as businesses. AI is everywhere. It seems you can’t log into an app now without seeing a “New update! Now with AI!” blast on your screen. While some of it is a gimmick (Why is AI on Uber Eats?!), there’s vast potential for AI to assist our working lives, especially when it comes to content creation and moderation.

We already know AI is used for moderating and creating content; that’s nothing new. The challenge at hand is understanding how to use AI for these tasks responsibly and strategically. The potential of AI is vast, but responsible innovation and safeguards are crucial, and world leaders are taking note.

US president Joe Biden signed an executive order on the safe, secure and trustworthy use of artificial intelligence. And just recently the Council of the European Union and European Parliament reached a provisional agreement on the Artificial Intelligence Act — the world’s first comprehensive AI regulation.

Ensuring transparency about use of AI is paramount. It’s crucial to retain human oversight, ensuring that AI remains a supportive tool rather than taking complete control, especially when it comes to using AI for content creation and moderation.

With that in mind, we’re going to explore findings from our own research and discuss the guiding principles that ensure that your use of AI is responsible and brand-safe.

The role of AI in content moderation

In short, content moderation involves screening and removing any content that goes against set guidelines, and AI can improve this process.

Here we’ll walk through how you can use AI to streamline your content supply chain, all the while ensuring responsible and considerate implementation.

Content moderation before AI

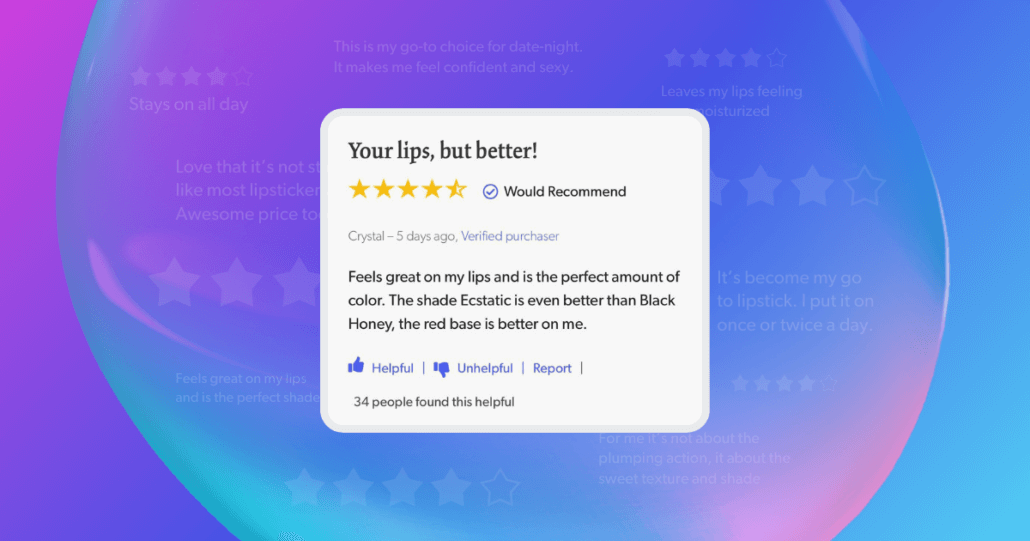

Consumers love to contribute reviews about your brand. This user-generated content (UGC) goldmine fosters trust and drives sales by making connections between you and your audience.

But this content won’t always necessarily align with your brand values. It might contain inappropriate language, prohibited content, reveal personal information, or even aim to manipulate perceptions of your brand, undermining that trust you’ve cultivated.

All of your clients likely have varying standards for what constitutes acceptable content. For instance, a beer company might embrace discussions about alcohol, while a children’s brand probably won’t. Or hopefully won’t, anyway.

Before AI came on the scene, content moderation relied on a manual approach.

A manual approach requires human moderators to check each piece of content against client preferences within a content management system, then decide whether to approve or reject it. Initially, this was the method we used at Bazaarvoice.

Results were generally fine. Human moderators ensure content appropriateness and authenticity. But it’s seriously time-consuming. On average it takes twenty hours before a review goes live. That delay clashes with consumers’ preference for recent content: they scan the latest reviews for up-to-date product information.

Also, engaged consumers expect their voices to be swiftly acknowledged. Delays in posting reviews can lead to a loss of engagement with your brand. This presents a core challenge: how can you ensure rapid, on-site content availability, while still upholding authenticity?

How to use AI in content moderation

The answer? By leveraging machine learning, a branch of AI that learns from existing data to derive patterns. For example, at Bazaarvoice we hold massive amounts of data: over 800 million unique pieces of review content historically, growing by 9 million each month.

Data like this can be used to train machine learning models to identify undesirable content and automatically flag any new content matching that profile. If you’re getting started with AI content moderation, follow these steps:

- Engage existing moderators to label data for model training and validation

- Have data scientists use this labeled data to train models tailored to one or more client use cases

- Deploy these models to a machine learning inference system so it can approve or reject new content collected for your clients

- Share client configurations with the machine learning system so that content is moderated to meet each client’s individual use case
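To make the last two steps more concrete, here is a minimal Python sketch of how a model's output and a per-client configuration might combine into an approve or reject decision. Everything in it is an assumption for illustration: the `TOY_MODEL` keyword lookup merely stands in for models trained on moderator-labeled data, and the label names, `ClientConfig`, and `moderate` are hypothetical rather than a description of Bazaarvoice's actual system.

```python
from dataclasses import dataclass

# Stand-in for a trained model: maps a content label to trigger words.
# A real system would use models trained on moderator-labeled data.
TOY_MODEL = {
    "alcohol": {"beer", "wine", "whiskey"},
    "profanity": {"damn", "crap"},
}


def predict_labels(text: str) -> set[str]:
    """Return the set of content labels detected in the text."""
    words = {w.strip(".,!?").lower() for w in text.split()}
    return {label for label, triggers in TOY_MODEL.items() if words & triggers}


@dataclass(frozen=True)
class ClientConfig:
    """Per-client moderation settings (hypothetical structure)."""
    name: str
    rejected_labels: frozenset[str]


def moderate(text: str, config: ClientConfig) -> str:
    """Approve or reject content according to one client's configuration."""
    blocked = predict_labels(text) & config.rejected_labels
    return "reject" if blocked else "approve"


# The same review gets a different outcome depending on the client.
brewery = ClientConfig("brewery", frozenset({"profanity"}))
kids_brand = ClientConfig("kids_brand", frozenset({"profanity", "alcohol"}))

review = "Great with a cold beer!"
print(moderate(review, brewery))     # approve
print(moderate(review, kids_brand))  # reject
```

Keeping the model's labels separate from the client's rules means one model can serve many clients, and a client can change what it accepts without any retraining.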

Consider the evolving landscape too. Consumer behaviors change, new trends emerge, and language evolves. What if AI tech can’t adapt to this? You’re risking brand trust and consumer safety, and no one wants that. Look at review bombing, for example, which usually happens in response to social or political dynamics that are near-impossible to predict.

Content operations teams can respond to these events, though, by training new models, adjusting existing ones, or fine-tuning client configurations to ensure only appropriate, authentic content ends up on-site.

AI should augment human effort, not replace it

This approach ensures that humans remain in charge. Clients specify what kind of content they consider appropriate or not, ensuring the AI only acts within their predefined parameters.

A responsible AI approach means better results for clients too. Currently, we moderate 73% of UGC with machine learning models, providing clients with filtered UGC tailored to their needs within seconds, not hours. A huge improvement on the hours required with solely-human moderators!
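One common way to keep humans in the loop is to let the model decide only when it is confident and send everything else to a moderator. The sketch below illustrates that pattern in Python. It is an assumption about how such routing could work, not a description of Bazaarvoice's system: the `route` function, the score scale, and both thresholds are hypothetical.

```python
def route(reject_score: float,
          approve_below: float = 0.1,
          reject_above: float = 0.9) -> str:
    """Decide who handles a piece of content.

    reject_score is a hypothetical model output in [0, 1], where higher
    means the content more likely violates the client's guidelines.
    """
    if reject_score >= reject_above:
        return "auto_reject"
    if reject_score <= approve_below:
        return "auto_approve"
    # The model is unsure, so a human moderator makes the call.
    return "human_review"


# Made-up scores for four pieces of content.
scores = [0.02, 0.97, 0.50, 0.05]
decisions = [route(s) for s in scores]
automated = sum(d != "human_review" for d in decisions) / len(decisions)

print(decisions)  # ['auto_approve', 'auto_reject', 'human_review', 'auto_approve']
print(automated)  # 0.75
```

Tightening or loosening the thresholds trades speed for human oversight, which is a decision people make, not the model.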

Responsible AI content creation for brands

Most brands take the initiative to craft their own content rather than relying only on UGC. Specifically, they carefully strategize the images, messages, and products they intend to highlight across their social media platforms, websites, and other channels.

But creating all this content is time-consuming. Most companies employ social media managers or similar roles dedicated to this task. Imagine if you could combine the skill of a social media manager with the utility of AI to automatically generate content. It sounds pretty remarkable, like mind reading even.

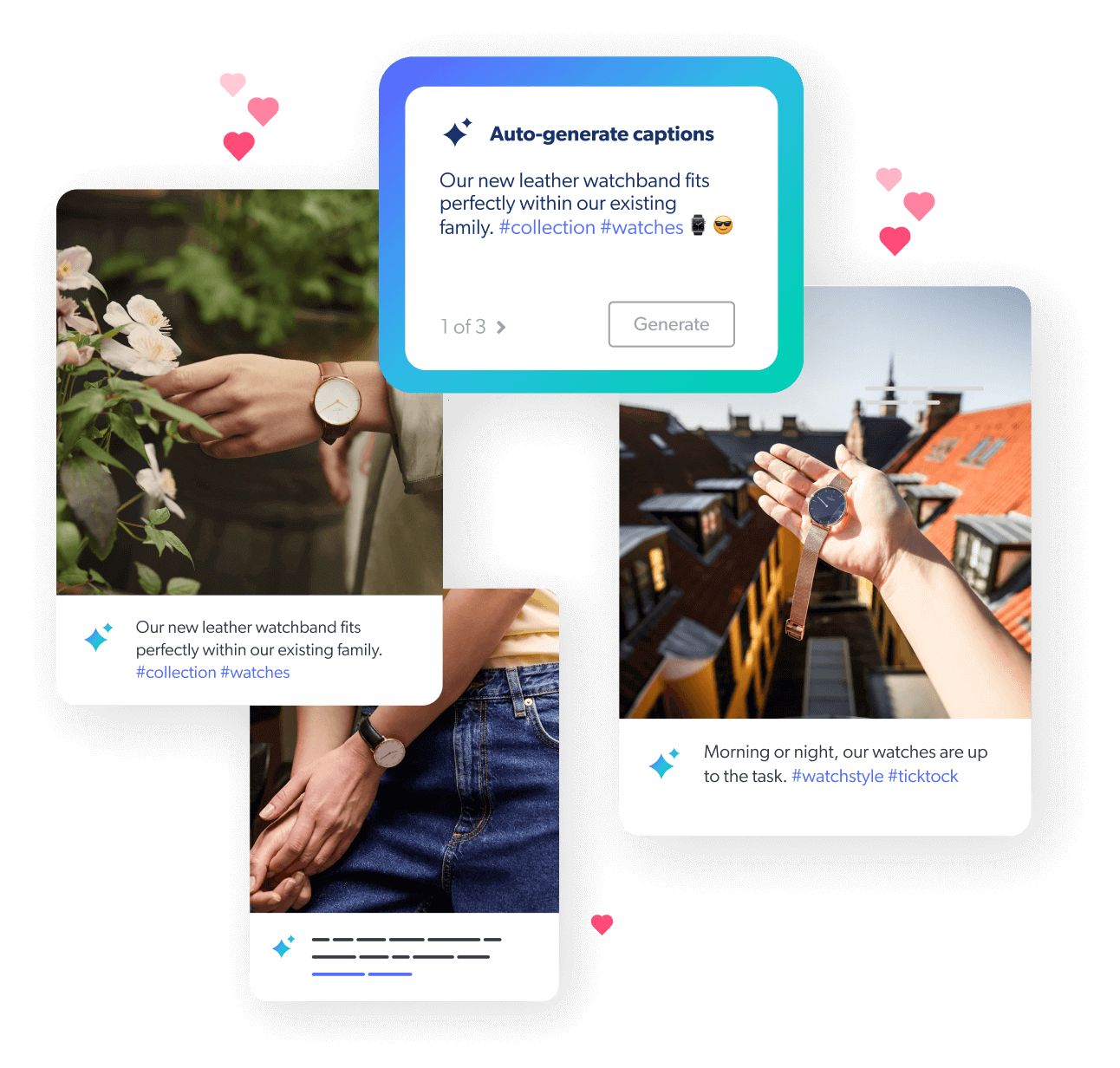

When clients onboard with Bazaarvoice, they link their social media accounts, providing us with ample data points showcasing their preferred topics and communication style.

When a user selects an image, our machine learning algorithms decipher its contents, telling us what they want to post about. We can also glean information about the products they aim to showcase from product tag data and learn their communication style through their social media history.

After clicking on “auto generate caption,” these data points undergo processing by our (cutting edge!) generative AI to craft a caption about the image and products in the client’s voice. The social media manager can then approve, refine, or reject the messaging.

It creates an incredible, symbiotic relationship. The tool combines the convenience of generative AI with the authentic voice of the client, allowing for revisions as needed. Once again, the AI acts as an assistant rather than a replacement.

In practice, many clients tend to tweak the suggested messaging, but they still appreciate how this feature jumpstarts their creative process. It’s akin to having a muse — inspiring human creativity based on past elements.

We introduced this product on our social commerce platform last year and it was an instant hit. Which got us thinking: can we do the same thing for our consumers?

Responsible AI creation for consumers

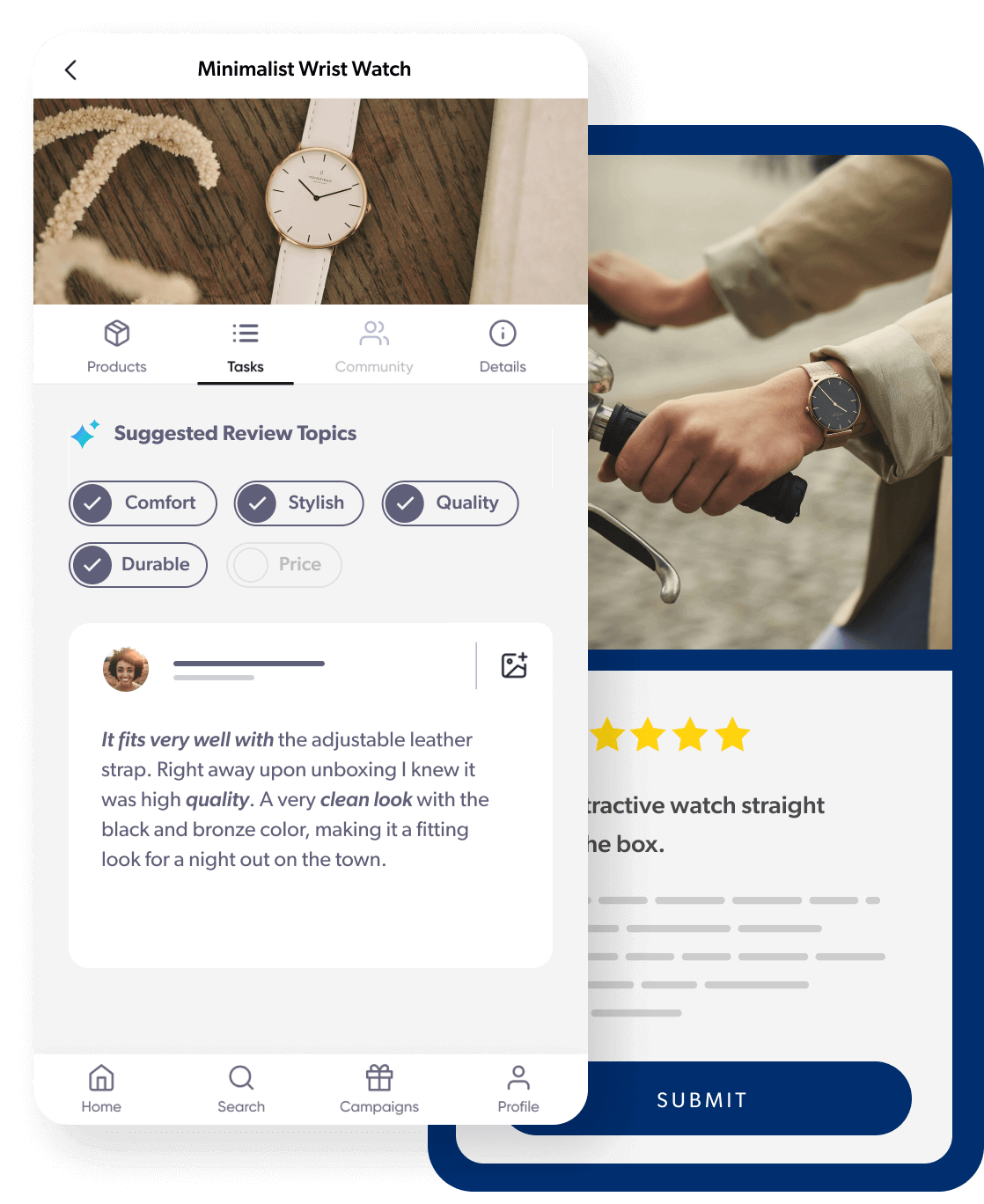

There’s an issue with customer reviews. According to our research, 68% of consumers feel uncertain about what to include in a review, meaning many reviews end up lacking detail or veering way, way off-topic.

Like with content moderation, can AI step in to assist consumers in crafting better, more informative reviews? This would help shopping become more transparent, because the more reviews we have, the more informed we are.

For example, let’s look at how Bazaarvoice Content Coach works. First, AI ideates which topics would be useful to include in a review, based on each product and our client’s catalog. These topics are then presented to the consumer. As the consumer writes, the system highlights the topics they’ve addressed.
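The highlighting step can be pictured with a small Python sketch. It assumes the suggested topics already exist and uses simple cue-word matching to detect which ones a draft covers. The topics, cue words, and `covered_topics` function are all hypothetical, and the real product may detect topics very differently.

```python
import re

# Hypothetical topics and cue words for a running shoe. In the product
# described above, the topics are suggested by AI from the product and catalog.
TOPIC_CUES = {
    "fit": ["fit", "size", "sizing"],
    "comfort": ["comfortable", "comfort", "cushion"],
    "durability": ["durable", "lasted", "worn out"],
}


def covered_topics(draft: str, topic_cues: dict[str, list[str]]) -> list[str]:
    """Return the topics whose cue words appear as whole words in the draft."""
    text = draft.lower()
    covered = []
    for topic, cues in topic_cues.items():
        if any(re.search(r"\b" + re.escape(cue) + r"\b", text) for cue in cues):
            covered.append(topic)
    return covered


draft = "True to size and really comfortable on long runs."
done = covered_topics(draft, TOPIC_CUES)
todo = [topic for topic in TOPIC_CUES if topic not in done]

print(done)  # ['fit', 'comfort']
print(todo)  # ['durability']
```

Note that the sketch only reports what the consumer has already written about; it never adds words to the review, which matches the coach-not-ghostwriter idea.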

What’s great about this approach is its blend of convenience and enjoyment. It guides users to write helpful reviews while making the process fun! Or at least as fun as writing a product review can be. It’s like a form of AI content moderation, but reversed. It functions as a coach rather than a ghostwriter, empowering users to refine their reviews.

Since its launch, Content Coach has facilitated the creation of almost 400,000 authentic reviews, with nearly 87% of users finding it beneficial. That’s a testament to how AI can be used to assist content creation, not own it.

Take AI assistance even further

These are all prime examples of leveraging generative AI in a responsible (but beneficial) manner. They enhance the consumer experience while maintaining authenticity, effectively coaching brands and consumers to maximize their reviews’ impact.

Examples like those above illustrate how an AI content moderation strategy augments human efforts responsibly and optimizes your content supply chain. We’re only scratching the surface here though. Discover the transformative potential of AI in authentically shaping your content strategy with our on-demand masterclass: How to use AI strategically and responsibly.